Alignment vs. creativity in generative code development

The pros and cons of deterministic code generation, and a plan for some experiments

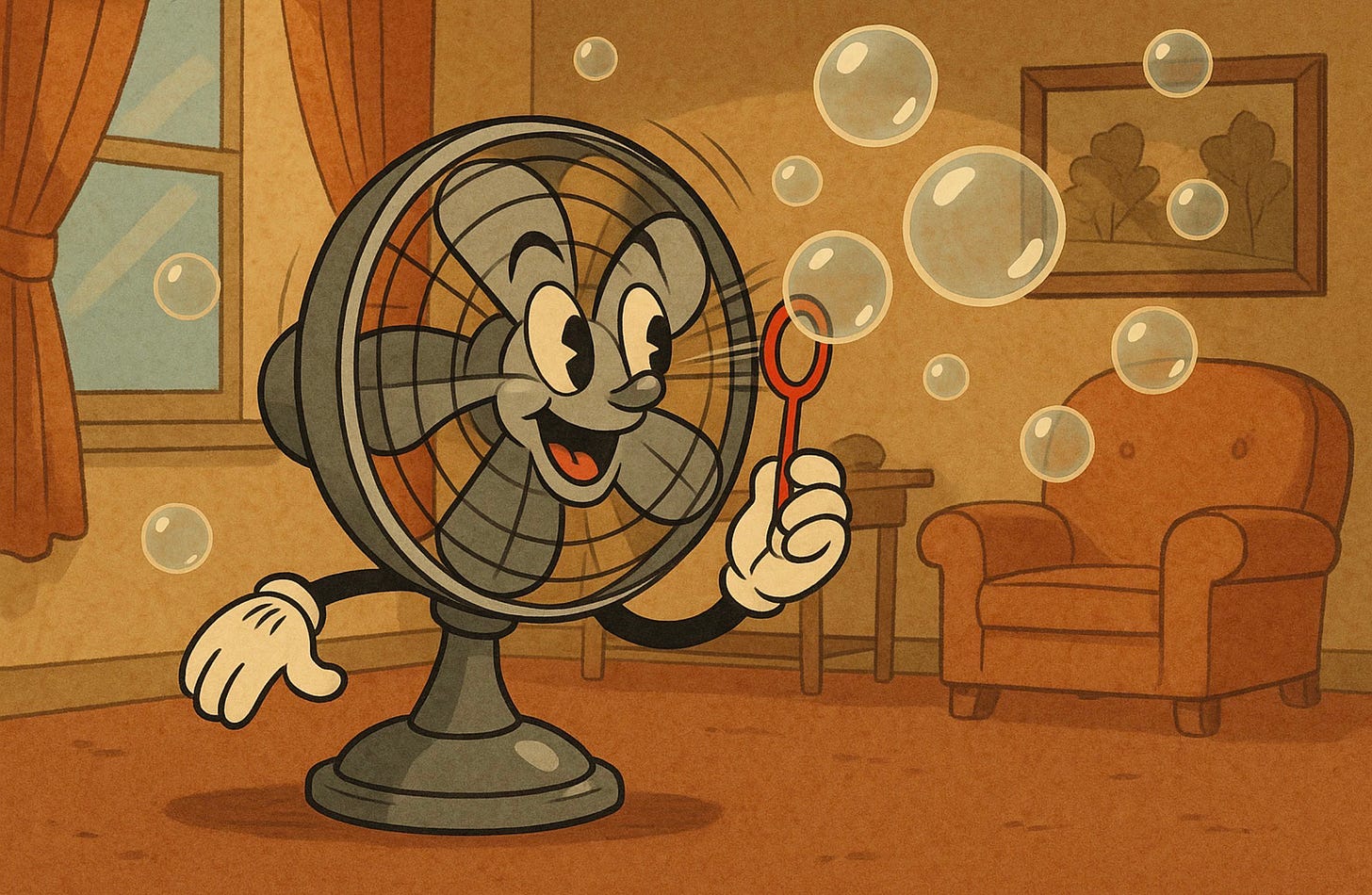

By now, most of us have tried generating images using text-to-image LLMs. Every model I’ve used shares one behavior: submitting the same prompt multiple times produces substantially different images each time. Here’s a quick example from a prompt I gave ChatGPT’s image generator:

Give me an image of a character that is a desktop-style fan with arms that uses its own operational fan blades to blow bubbles using a bubble wand. Bubbles should be in the air around the character. Style should be 40s-50s cartoon animation. Background should be interior of a period appropriate home, rendered with colors that match the style of that period of animation.

While both generated images follow my prompt’s guidance, I did not specify every detail to be generated. Each missing element (the number of bubbles, the fan’s direction, the furniture’s color) and every ambiguity (what exactly does ’40s/’50s animation style look like?) requires the LLM to guess and imagine how those parts of the image should be rendered.

It’s that “guessing” and “imagining” I’d like to explore in a code generation context in a few articles. Throughout these articles, I’ll refer to this guessing and imagining behavior as creativity.

Describing an LLM as creative is controversial, even threatening, especially when discussing LLM integration into industries driven by artistry and human expression. For now, I want to sidestep those considerations and focus on creativity in a code generation context.

How important is creativity for successful AI code generation? Could LLM creativity actually be counterproductive in coding tasks such as new feature generation and code maintenance?

Why deterministic LLM output could be useful

When code generation is less deterministic and more creative, generating the same codebase twice produces different function names, variable names, file structures, and even logic. Even with identical prompts and context, sampling randomness (a different random seed on each run) and uncontrolled tool responses lead the LLM to make different choices each time.

The cartoon demo in the intro illustrates this: two identical prompts produce distinct images of bubble-blowing fans.

Software engineers rely on deterministic code compilation and project building. Especially with resource-constrained targets like microcontrollers, developers expect the same toolset and unchanged codebase to produce identical code every time they build the project.

This deterministic output is essential for debugging, maintaining standards compliance, and enabling reliable firmware updates in the field.

Generating reliably deterministic output from AI-assisted code generation tools could provide similar benefits. In a prompt-first development workflow, deterministic code output would enforce codebase stability, helping with review, regression testing, and diffing. Adding new features, rolling back portions of the codebase, and partial regeneration of code all become safer and less disruptive.

As LLMs begin writing code for embedded applications, deterministic output could eventually enable code generation for real-time operations where tight timing must be maintained.

Still, I can’t help but feel we lose more than we gain if we eliminate creativity from an LLM entirely. An LLM without creativity is basically a natural language compiler—a useful tool, but one that doesn’t take full advantage of an LLM’s capabilities.

Alignment

On the spectrum of LLM output, creativity’s opposite is alignment. LLM alignment provides guardrails and ensures adherence to safety norms and constraints. It also applies to the training necessary to develop capabilities for successfully completing tasks like writing code. Alignment enables determinism.

How can a developer achieve alignment in LLM output? Setting the sampling temperature to 0, controlling random seeds, and choosing the right model for a project all help. Beyond those settings, the level of determinism in code generation is a function of context: the more specific the context the developer provides, the more deterministic the output.
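To make the temperature and seed settings concrete, here is a minimal toy sketch of temperature-scaled sampling. It is not how any particular LLM is implemented: the `LOGITS` scores, the `sample_token` and `generate` helpers, and the four-token vocabulary are all hypothetical, chosen only to show why a temperature of 0 or a fixed seed makes repeated runs agree.

```python
import math
import random


def sample_token(logits, temperature, rng):
    """Pick an index from `logits` using temperature-scaled softmax sampling."""
    if temperature == 0:
        # Greedy decoding: always take the highest-scoring token.
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(x - peak) for x in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


def generate(logits, temperature, seed, length=20):
    """Sample `length` tokens from the same toy distribution with a seeded RNG."""
    rng = random.Random(seed)
    return [sample_token(logits, temperature, rng) for _ in range(length)]


# Hypothetical scores for four candidate tokens (an assumption for illustration).
LOGITS = [2.0, 1.5, 0.5, 0.1]

# Temperature 0: the seed no longer matters, every run picks the top token.
greedy_a = generate(LOGITS, temperature=0, seed=1)
greedy_b = generate(LOGITS, temperature=0, seed=2)

# Temperature 1 with the same seed: random, but reproducible.
seeded_a = generate(LOGITS, temperature=1.0, seed=42)
seeded_b = generate(LOGITS, temperature=1.0, seed=42)

print("greedy runs match:", greedy_a == greedy_b)
print("same-seed runs match:", seeded_a == seeded_b)
```

In this toy the two settings are sufficient for repeatable output. With hosted models that is not always the case: even at temperature 0 with a fixed seed, identical output is often best effort rather than guaranteed, which is part of why context carries so much of the load.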

Next steps

In the next few articles, I’ll explore how LLM code generation varies when building the same project with different amounts of context. I’ll experiment across a spectrum—from loose “vibe coding” to rigidly defined software development practices. This means adjusting the detail in PRDs, architecture documents like API contracts, and the prompts I give the LLM. I’ll also need a standard process to measure the qualitative and quantitative differences from build to build.
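As a rough idea of what a quantitative build-to-build measurement could look like, here is a small sketch using Python's standard `difflib` module. The two metrics (`line_similarity` and `identifier_overlap`) and the `BUILD_A` / `BUILD_B` snippets are my own illustrative assumptions, not a settled methodology.

```python
import difflib
import re


def line_similarity(a, b):
    """Ratio in [0, 1] of matching lines between two source strings."""
    matcher = difflib.SequenceMatcher(None, a.splitlines(), b.splitlines())
    return matcher.ratio()


def identifiers(src):
    """Set of identifier-like tokens (keywords included) found in `src`."""
    return set(re.findall(r"[A-Za-z_][A-Za-z0-9_]*", src))


def identifier_overlap(a, b):
    """Jaccard overlap of the identifier sets of two source strings."""
    ids_a, ids_b = identifiers(a), identifiers(b)
    union = ids_a | ids_b
    return len(ids_a & ids_b) / len(union) if union else 1.0


# Two hypothetical generations of the "same" function (made up for this sketch).
BUILD_A = "def add(a, b):\n    return a + b\n"
BUILD_B = "def sum_two(x, y):\n    return x + y\n"

print("line similarity:", line_similarity(BUILD_A, BUILD_B))
print("identifier overlap:", identifier_overlap(BUILD_A, BUILD_B))
```

Both builds behave identically, yet they share no lines and few names, so textual metrics like these capture naming and structural drift rather than functional equivalence. A fuller process would likely pair them with a shared test suite.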

I believe software teams will develop strategies to deliberately balance creativity and alignment in code-generating LLMs throughout a project’s lifecycle. Hopefully, my experiments over the next few weeks will help illustrate:

How much an LLM’s output varies from generation to generation due to ambiguities and missing context

How developers can curate context to balance alignment and creativity in LLM output

Note: In these articles, my thoughts on alignment and creativity are informed in part by a book I’m reading, Creative Machines: AI, Art, and Us.

This is a very good topic I’ve never thought of before. Deterministic generation is really tempting, because it would let us store the whole project as a prompt (PRD), but I agree that creativity is also very much needed. What would make sense to me is an iterative approach where both are used: prompt → deterministic generation → code → review, debug, creative generation → new, improved prompt → deterministic generation → new, improved code → etc.

In the end, this is almost what we already do with AI-assisted programming: there is a human reviewer, who discovers the ambiguities in the PRD and improves it to get better code.

Wouldn’t this approach unify the benefits of both alignment and creativity?