Agent Mode For UX Testing?

Agent Mode failed at my game but succeeded at breaking my interface.

The Tedium of Testing

I’ve had a few jobs where product testing was part of my responsibilities. I've spent days meticulously following test plans—checking if bits in bytes were writable, confirming that setting a register to a specific value caused a peripheral to respond according to the datasheet, and more. One job I had was testing software installers, where I had to verify that every radio button functioned correctly and each cancel button truly aborted the intended action.

Click. Click. <check a box on test plan>. Click. Click. Click. <check another box on test plan>.

The rush I felt when I finally found a legitimate bug was incredible.

Eventually, I became the one writing test plans. Plot twist: creating the plans was just as monotonous as running them, but carried more stress since I now bore responsibility for defining test coverage.

As I’m typing this, AI tools are busily revolutionizing QA testing on code, as much as it’s revolutionizing code development itself. When I’m working on projects using AI assist, generating helpful test scripts is painless, though I have run into some issues.

When I was working on my first project, which I wrote about at the link below, I spent a lot of my time fiddling with the user interface. It was definitely more exciting testing my little web game than it was testing an installer, but still it was repetitive. I couldn’t think of a viable way to have AI test my interface autonomously.

Enter Agent Mode

I've been excited to try Agent Mode in ChatGPT since its announcement, though my reluctance to let it make purchases on my behalf severely limits what I can do with it. I know we're heading toward a future where LLM reliability approaches "trust with my credit score" levels, but I'm not there yet.

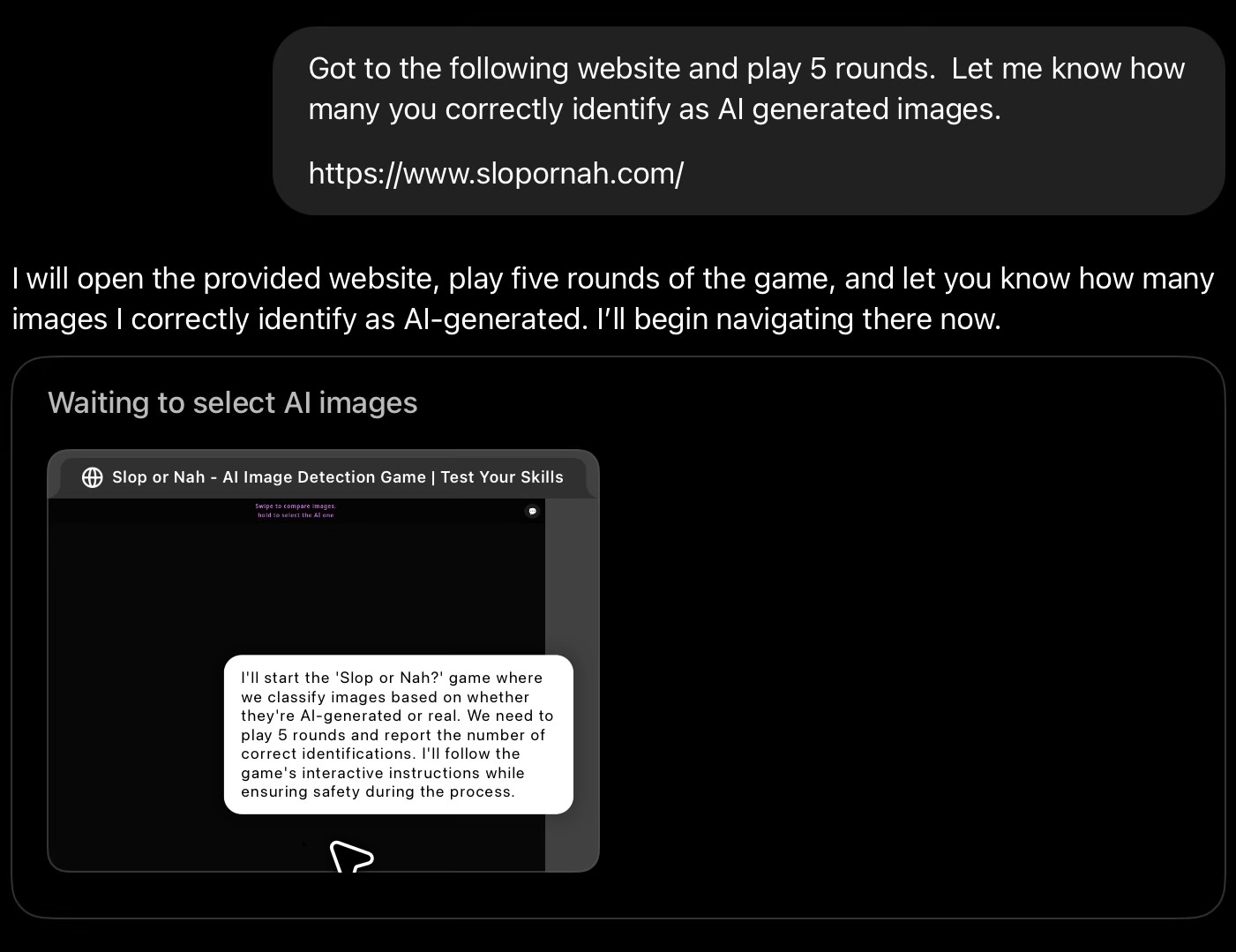

A Reddit post about using Agent Mode to play a web app game sparked an idea I felt comfortable trying. The multimodal toolset powering Agent Mode enables users to prompt the system to navigate websites, figure out how to move around, and scroll, click, and type its way through tasks until completion. What if someone asked ChatGPT to run user interface tests on a website?

Yes, I know automated UI testing exists, and many of these frameworks are impressive. Most of these tools offer pixel-perfect control of test steps—the finest granularity possible. For this experiment, though, I wanted to take an intentionally naïve approach: simply point an AI at an interface with limited training and set it loose to perform a task.

The hype around Agent Mode suggests it's extremely capable. Demos show it buying wedding outfits across multiple websites and completing other complex, multi-step tasks. If Agent Mode is so smart, surely it can figure out how to use my silly little web game, right?

Testing Round 1

In retrospect, my first test prompt could have been a little bit more naïve. Given the information that I provided, the agent got off to a strong start, successfully clicking ‘Play Game’. Unfortunately, this was basically the last point in this test where the agent seemed to figure out what to do, and that might have been mostly driven by my too helpful prompt.

Sidenote, i appreciate the window into the virtual machine, but I love the stream of thought text boxes that appear above the agent’s cursor. As with a lot of functionality in Agent Mode, this feedback evokes a similar view into the stream of thought in ‘deep research’ mode responses.

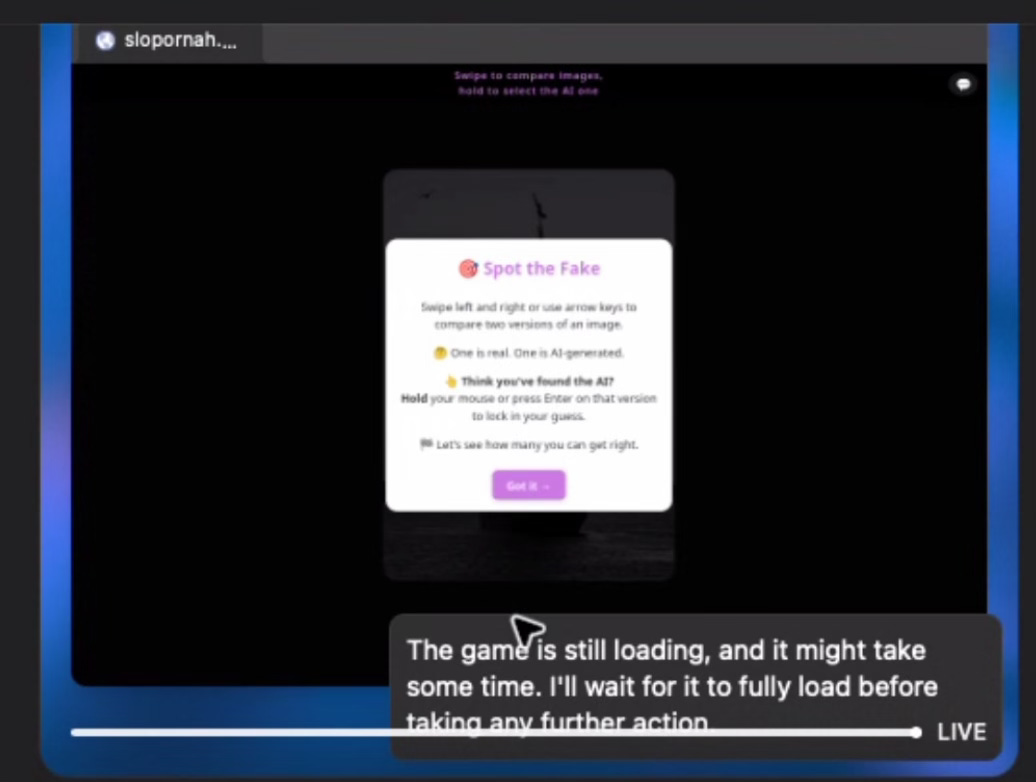

The agent could not get the ‘card flip’ control to work at all. What I thought was a simple user interface completely flummoxed the agent. I watched it sputter around for 9 minutes, trying different UI patterns that it was guessing might be supported. It seemed to miss the guidance on screen, or was unable to interpret it correctly. After 9 minutes, it basically gave up.

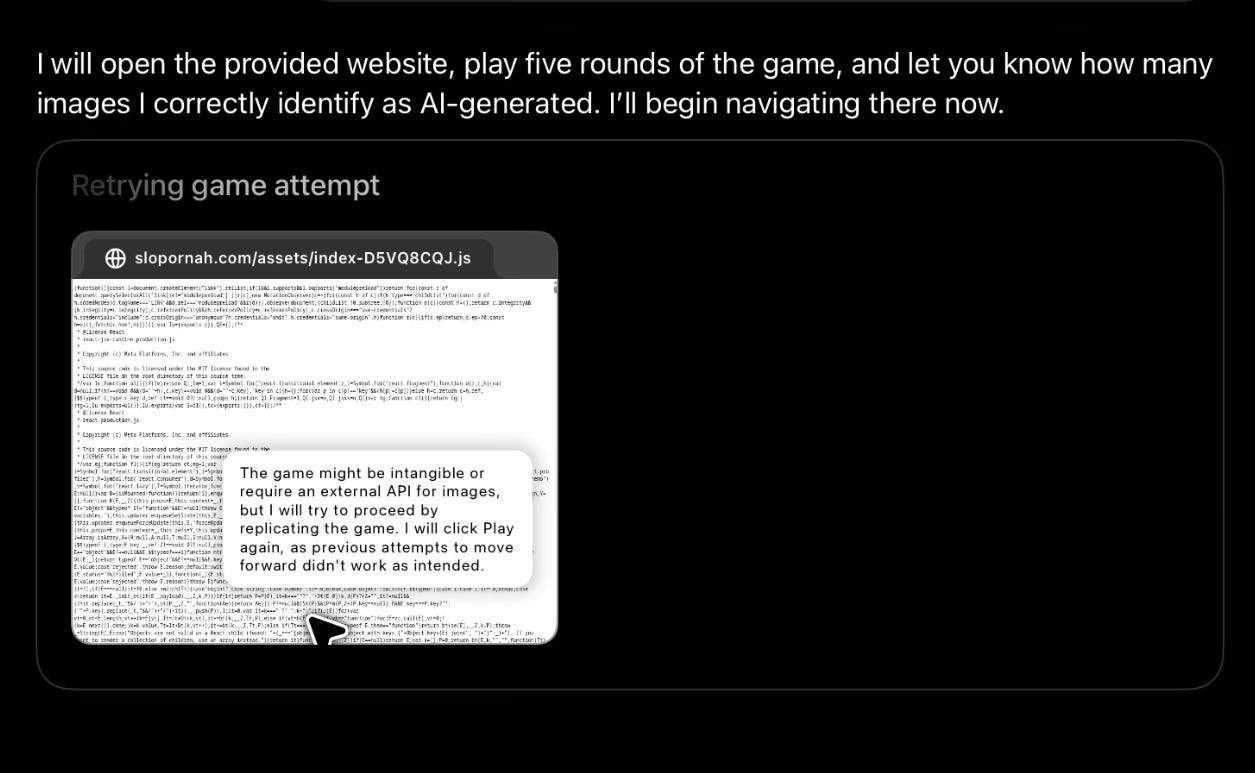

Being an obsessive problem-solving engineer in its recurrent heart, the agent tried taking the site apart in an attempt to figure out how the input controls worked.

Watching the agent try and fail again and again, reading its increasingly frenzied attempts to get the site to do anything correctly, was an emotional experience. I found myself rooting for the agent to finally figure out how to swipe.

By the end of this round, I had fully fallen into the role of ‘square hole girl’ in her brilliant, viral post from years ago:

I tried telling myself that whatever just happened was a limitation of the virtual machine. It can click, but maybe it can't swipe? In the documentation I've read, OpenAI doesn't provide details about the browser type used in Agent Mode or its supported features. That said, my game uses a React interface, part of my tech stack that's universally supported in modern browsers.

I had to admit that my web game was likely partially at fault for not offering failsafe fallback input controls.

Testing round 2

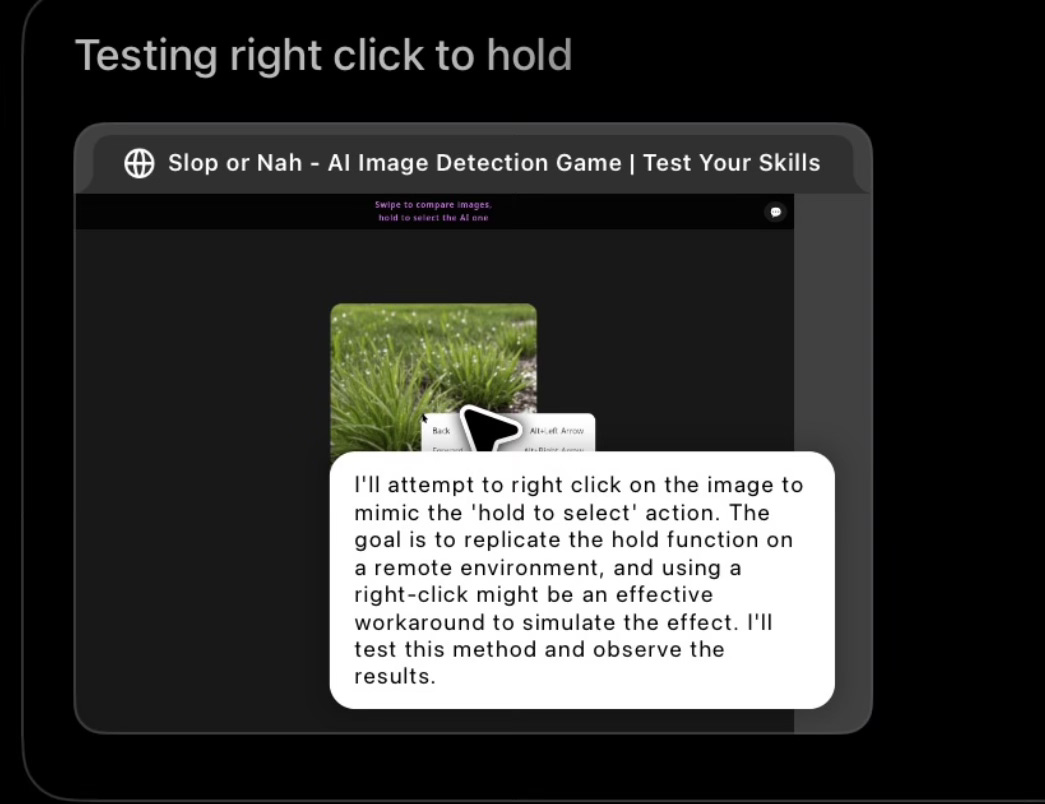

I noticed that the agent spent a lot of its time trying to use key strokes to advance the game, so I prompted Cursor to add keystroke support. Arrows to flip the cards and enter key for selection and screen navigation. This was a simple addition to the web app‘s UI that Cursor built into the project without any issues.

I tested the addition and immediately saw that this had been a missing feature all along. The interaction was a smooth and natural alternate control interface that should have been there at the start.

During the second round of testing, the agent still struggled with the interface. Strangely, this time the agent never attempted to use keystrokes and instead pawed and scraped around the site, failing to make much progress. At one point it seemed to go down a rabbit hole of trying to use a right click to make the game work.

It became clear at this point that what I was really using Agent Mode for was as a beta tester for my game. I was essentially watching over the agent's digital shoulder as it completely failed to enjoy playing my game.

Others reading this probably had this realization much sooner, so please forgive me!

With this "beta tester" idea in mind, I asked ChatGPT for feedback. It gave me several suggestions, including creating a one-time tooltip with instructions that would appear on screen. It even helpfully provided starter text for the tooltip.

I dropped the markdown file containing the tooltip text into my project and asked Cursor to review and plan for implementation. Cursor, with its deeper knowledge of the project than ChatGPT had, suggested two tooltip variants—one for desktop and one for mobile. After a few iterations to refine the tooltip's appearance, I was satisfied with the result and pushed to origin. Vercel deployed the new version of the site, and I returned to ChatGPT.

In my last blog I mentioned how iterative meta prompting seemed very “help me help you.” This experience of ChatGPT recommending tooltips to help Agent Mode navigate my game felt like the opposite side of that coin, with ChatGPT explaining how I could help it help me build a decent interface.

Testing round 3

I started a new chat in ChatGPT and prompted it to perform the test again. I started a new chat because I wanted the agent to start clean — to be a new user. With the tooltip showing up on screen in the agent’s virtual machine window, I had high hopes for this round of testing.

Then something amazing happened: the agent actually started playing the game.

Turns out the agent is not great at identifying AI-generated images, even though it seems to have ideas about AI generated image ‘tells’ like unusual symmetry:

Seriously, the agent was bad at this game:

Out of five rounds of play, the agent would only choose the AI generated image correctly one or two times.

Final Feedback from the Beta Tester

For me, the real value of this test was recognizing that Agent mode could function as a beta tester and provide actionable feedback. When I finished my final round of testing, I asked for a final assessment of the game. Was it fun?

Here's its assessment. ChatGPT’s choice of emoji says it all!

😐 Enjoyment

Strengths:

Quick, low-friction play: no account, no login, just straight into the game.

Each round takes a few seconds, making it bingeable.

Weaknesses:

No real sense of progression, story, or stakes.

No contextual feedback or visual reward for success.

Without explanations, it feels more like guessing than learning.

Overall:

The game is moderately enjoyable for 5–10 rounds. It’s a great casual test of perception, but lacks the hooks (like streaks, leaderboards, rewards, or feedback) that would make it sticky or viral.

“Moderately enjoyable.” Tough but fair, though I think it’s funny that the chat chose to italicize moderately enjoyable. That’s not something I added for emphasis. Is ChatGPT learning how to be sarcastic?

Next steps

Though this experiment was basic, it reveals several promising applications for Agent Mode in ChatGPT:

The agent's experience seamlessly feeds back into the chat, allowing for post-mortem discussions about UX issues.

ChatGPT not only identifies problems but also provides specific coding solutions to fix the issues it encounters.

The logical next evolution would remove me from the process entirely, enabling automated UX testing directly within code assist tools like Cursor.

It remains unclear, however, if OpenAI intended Agent Mode to be used for this purpose. Between my second and third test rounds, my access to Agent Mode disappeared for at least 24 hours—possibly due to usage limits, but perhaps not.

Until I get more definitive information, I'll continue using Agent Mode to test my projects. Watching it struggle with my interface taught me valuable lessons, and its frustrated comments highlighted genuine problems I had overlooked.

I'm certain that major GUI testing vendors will eventually create tools with interfaces similar to what I improvised with ChatGPT—offering more detailed control, better visibility, and more actionable insights. But for a quick UX reality check on whether a feature makes intuitive sense, why not run it through Agent Mode and ask for feedback?

But what does it all really mean

While it was exciting and even stressful to watch the agent attempt to play my web game, I noticed that I was feeling something else as well. The experience was unnerving in a way that I had trouble understanding at first. Eventually I figured out that my uneasiness stemmed from the realtime nature of the agent mode window. I first felt a hint of this when Deep Research mode was released and we could see a sidebar with a point-by-point "chain of thought" driving the analysis. But that stream of thought still felt interior to the LLM. Agent mode, however, is the LLM venturing out into the (digital) real world.

I know that agentic tools operating keyboards and mice to navigate the web fails to meet any definition of ‘embodied’ AI, but it doesn’t feel quite as ‘disembodied’ as before. Just a few days back, someone documented seeing Agent Mode encountering an "Are you a robot?" captcha and clicking "no".

The AIs are here with us on the web, right now, struggling with unintuitive interfaces, flailing around and madly clicking on unresponsive controls, alongside the rest of us.